Is It Time to Talk More About DeepSeek?

The DeepSeek MLA optimizations were contributed by Ke Bao and Yineng Zhang. Benchmark results show that SGLang v0.3 with MLA optimizations achieves 3x to 7x higher throughput than the baseline system. Multi-head Latent Attention (MLA) is a new attention variant introduced by the DeepSeek team to improve inference efficiency. The interleaved window attention was contributed by Ying Sheng. The torch.compile optimizations were contributed by Liangsheng Yin. To use torch.compile in SGLang, add --enable-torch-compile when launching the server.

DeepSeek's official API is compatible with OpenAI's API, so you only need to add a new LLM under admin/plugins/discourse-ai/ai-llms. I'd say this saved me at least 10-15 minutes of googling for the API documentation and fumbling until I got it right. I assume @oga wants to use the official DeepSeek API service instead of deploying an open-source model on their own. I assume that most people who still use the latter are beginners following tutorials that have not been updated yet, or possibly even ChatGPT outputting responses with create-react-app instead of Vite. That night he dreamed of a voice in his room that asked him who he was and what he was doing. DBRX 132B, companies spend $18M avg on LLMs, OpenAI Voice Engine, and much more!
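Because the DeepSeek API is described above as OpenAI-compatible, a request to it should have the same shape as an OpenAI chat completion request. The following is a minimal sketch that only builds the request and does not send it. The base URL, the `/chat/completions` path, and the `deepseek-chat` model name are assumptions taken from DeepSeek's public documentation rather than from this post, and `build_chat_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumed values (not stated in the post): base URL and model name as
# published in DeepSeek's public API docs. The API key is a placeholder.
BASE_URL = "https://api.deepseek.com"
MODEL = "deepseek-chat"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-style chat completion request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Hello", api_key="sk-placeholder")
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

Any client that already speaks the OpenAI chat format, such as the Discourse AI plugin mentioned above, would presumably need only the base URL, model name, and key changed.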

While encouraging, there is still much room for improvement. On FRAMES, a benchmark requiring question-answering over 100k-token contexts, DeepSeek-V3 closely trails GPT-4o while outperforming all other models by a significant margin. Those are readily available; even the mixture of experts (MoE) models are readily available. We are actively collaborating with the torch.compile and torchao teams to incorporate their latest optimizations into SGLang. We activate torch.compile for batch sizes 1 to 32, where we observed the most acceleration. With this combination, SGLang is faster than gpt-fast at batch size 1 and supports all online serving features, including continuous batching and RadixAttention for prefix caching. You can launch a server and query it using the OpenAI-compatible vision API, which supports interleaved text, multi-image, and video formats. LLaVA-OneVision is the first open model to achieve state-of-the-art performance in three important computer vision scenarios: single-image, multi-image, and video tasks. DeepSeek-V3 achieves the best performance on most benchmarks, especially on math and code tasks.
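To illustrate what "interleaved text and multi-image" input looks like in an OpenAI-compatible vision request, here is a small sketch that builds one user message in the OpenAI chat format, where `content` is a list of `text` and `image_url` parts. The helper name `build_interleaved_message` and the example URLs are hypothetical, and video input is not covered because the post does not say how it is encoded.

```python
def build_interleaved_message(parts):
    """Turn (kind, value) pairs into one OpenAI-style user message.

    kind is "text" (value is the text) or "image" (value is an image URL).
    The order of the parts is preserved, which is what makes the input
    interleaved.
    """
    content = []
    for kind, value in parts:
        if kind == "text":
            content.append({"type": "text", "text": value})
        elif kind == "image":
            content.append({"type": "image_url", "image_url": {"url": value}})
        else:
            raise ValueError(f"unsupported part kind: {kind!r}")
    return {"role": "user", "content": content}


if __name__ == "__main__":
    # Placeholder URLs, for illustration only.
    message = build_interleaved_message([
        ("text", "What changed between these two screenshots?"),
        ("image", "https://example.com/before.png"),
        ("image", "https://example.com/after.png"),
    ])
    print(message)
```

The resulting dictionary would go into the `messages` list of a chat completion request sent to the server.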

We used the accuracy on a chosen subset of the MATH test set as the evaluation metric. It performs better than Coder v1 && LLM v1 on NLP / Math benchmarks. torch.compile is a major feature of PyTorch 2.0. On NVIDIA GPUs, it performs aggressive fusion and generates highly efficient Triton kernels. We enhanced SGLang v0.3 to fully support the 8K context length by leveraging the optimized window attention kernel from FlashInfer (which skips computation instead of masking) and refining our KV cache manager. We have integrated torch.compile into SGLang for linear/norm/activation layers, combining it with FlashInfer attention and sampling kernels. Due to its differences from standard attention mechanisms, existing open-source libraries have not fully optimized this operation. Apart from standard techniques, vLLM offers pipeline parallelism, allowing you to run this model on multiple machines connected by networks. Note that for each MTP module, its embedding layer is shared with the main model. Note that the GPTQ calibration dataset is not the same as the dataset used to train the model; please refer to the original model repo for details of the training dataset(s). The LLM was trained on a large dataset of 2 trillion tokens in both English and Chinese, employing architectures such as LLaMA and Grouped-Query Attention.

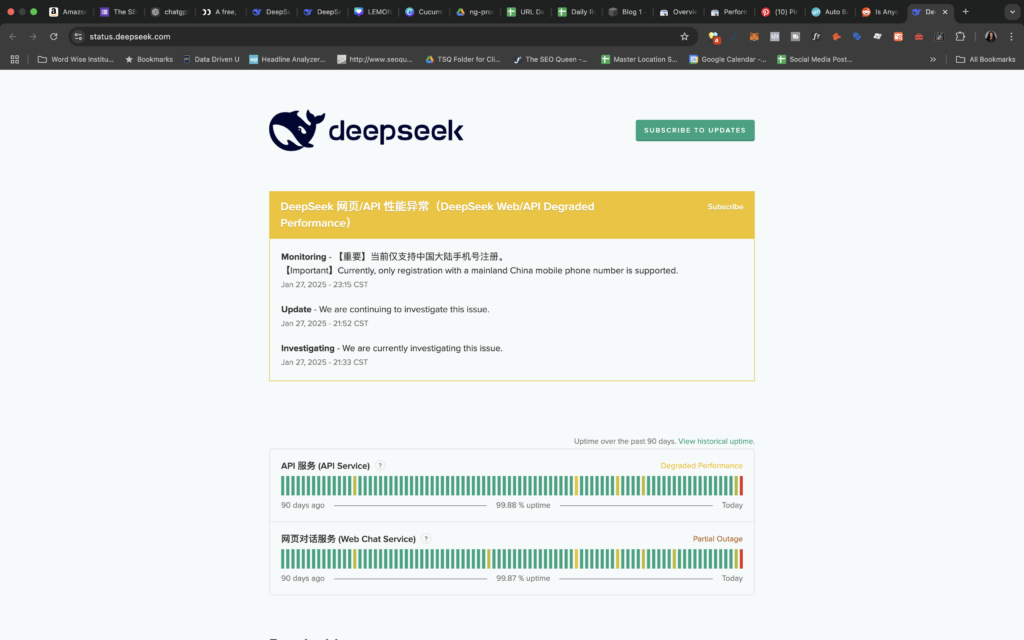

Google's Gemma-2 model uses interleaved window attention to reduce computational complexity for long contexts, alternating between local sliding window attention (4K context length) and global attention (8K context length) in every other layer. Recently, Alibaba, the Chinese tech giant, also unveiled its own LLM called Qwen-72B, which has been trained on high-quality data consisting of 3T tokens and has an expanded context window length of 32K. Not just that, the company also added a smaller language model, Qwen-1.8B, touting it as a gift to the research community. Say hello to DeepSeek R1, the AI-powered platform that's changing the rules of data analytics! SingleStore is an all-in-one data platform to build AI/ML applications. You'll need to sign up for a free account on the DeepSeek website in order to use it, but the company has temporarily paused new sign-ups in response to "large-scale malicious attacks on DeepSeek's services." Existing users can sign in and use the platform as normal, but there's no word yet on when new users will be able to try DeepSeek for themselves. Claude 3.5 Sonnet has proven to be one of the best performing models on the market, and is the default model for our Free and Pro users.
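The interleaved window attention described for Gemma-2 at the start of the paragraph above can be pictured with a toy mask. This is only a sketch: it assumes even-numbered layers are the local (sliding window) ones and odd-numbered layers are global, and it uses explicit boolean masks for readability. An optimized kernel would instead skip the out-of-window computation entirely. The function names are hypothetical.

```python
def causal_mask(seq_len, window=None):
    """Return mask[q][k], True when query position q may attend to key k.

    With window=None this is plain causal (global) attention. With a
    window, a query only sees itself and the window - 1 keys before it.
    """
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            visible = k <= q
            if window is not None:
                visible = visible and (q - k) < window
            row.append(visible)
        mask.append(row)
    return mask


def layer_mask(layer_idx, seq_len, window):
    """Alternate local and global attention in every other layer.

    Assumption for illustration: even layers are local, odd are global.
    """
    if layer_idx % 2 == 0:
        return causal_mask(seq_len, window)
    return causal_mask(seq_len)


if __name__ == "__main__":
    # Tiny sizes for readability; the post cites 4K local and 8K global.
    for layer in range(2):
        print(f"layer {layer}")
        for row in layer_mask(layer, seq_len=6, window=3):
            print("".join("x" if v else "." for v in row))
```

In local layers the number of visible keys per query is capped by the window size, so attention cost grows linearly with sequence length instead of quadratically.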
