Marriage And DeepSeek Have More In Common Than You Assume

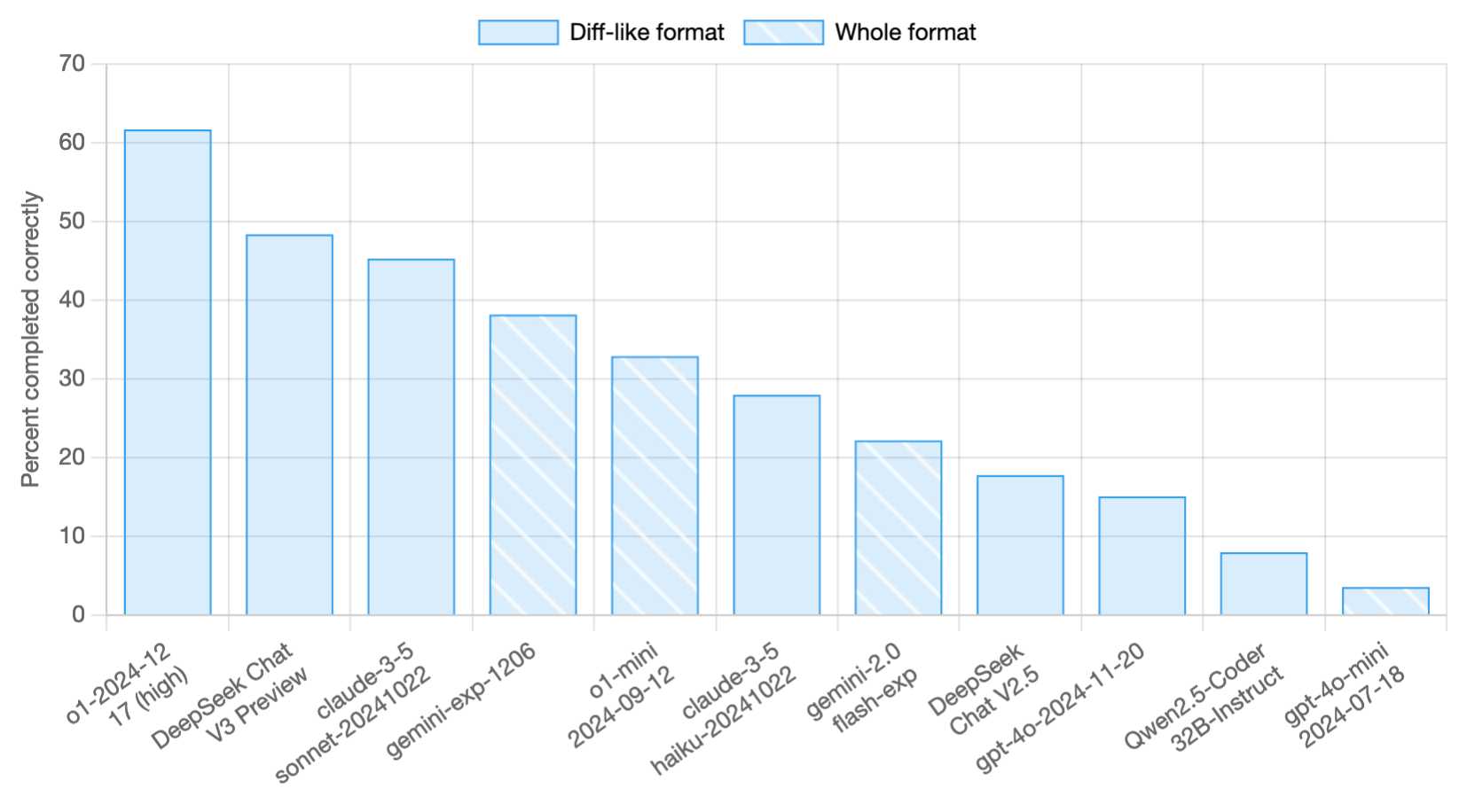

DeepSeek enables hyper-personalization by analyzing consumer behavior and preferences. Like any lab, DeepSeek surely has other experiments running in the background too. They should consider five lessons: 1) we're moving from models that recognize patterns to models that can reason, 2) the economics of AI are at an inflection point, 3) the current moment shows how proprietary and open-source models can coexist, 4) silicon scarcity drives innovation, and 5) despite the splash DeepSeek made with this model, it didn't change everything, and things like proprietary models' advantages over open source are still in place. The following plot shows the share of compilable responses over all programming languages (Go and Java). DROP: A reading comprehension benchmark requiring discrete reasoning over paragraphs. You can turn on both reasoning and web search to inform your answers. DeepSeekMath: Pushing the limits of mathematical reasoning in open language models. GPT-4o: This is the latest version of the well-known GPT language family.
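The plot itself is not reproduced here. The sketch below shows the arithmetic behind such a chart. It assumes each response is recorded as a (language, compiled?) pair. The sample data and the `compilable_share` helper are hypothetical and are not taken from the original evaluation.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical sample data: (language, compiled_ok) pairs, made up for illustration.
responses: List[Tuple[str, bool]] = [
    ("Go", True), ("Go", False), ("Go", True),
    ("Java", True), ("Java", True), ("Java", False), ("Java", False),
]

def compilable_share(results: List[Tuple[str, bool]]) -> Tuple[Dict[str, float], float]:
    """Return the per-language share and the pooled share of responses that compiled."""
    if not results:
        return {}, 0.0
    total = Counter(lang for lang, _ in results)
    ok = Counter(lang for lang, compiled in results if compiled)
    per_lang = {lang: ok[lang] / total[lang] for lang in total}
    overall = sum(ok.values()) / len(results)  # pooled over all responses
    return per_lang, overall

per_lang, overall = compilable_share(responses)
print(per_lang)
print(f"overall: {overall:.2%}")
```

The pooled share weights each language by the number of responses it contributed. Averaging the per-language shares instead would give Go and Java equal weight.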

Similarly, Baichuan adjusted its answers in its web version. DeepSeek-AI (2024a). DeepSeek-Coder-V2: Breaking the barrier of closed-source models in code intelligence. The paper introduces DeepSeek-Coder-V2, a novel approach to breaking the barrier of closed-source models in code intelligence. With a focus on protecting clients from reputational, financial and political harm, DeepSeek uncovers emerging threats and risks and delivers actionable intelligence to help guide clients through difficult situations. This qualitative leap in the capabilities of DeepSeek LLMs demonstrates their proficiency across a wide range of applications. It highlights the key contributions of the work, including advances in code understanding, generation, and editing. Its lightweight design, made by Google, maintains powerful capabilities across these diverse programming applications. The model is deployed in a secure AWS environment under your virtual private cloud (VPC) controls, which helps support data security. Also note that if you don't have enough VRAM for the size of model you are using, the model may end up running on the CPU and swap instead. How they're trained: the agents are "trained via Maximum a-posteriori Policy Optimization (MPO)." One big advantage of the new coverage scoring is that results that achieve only partial coverage are still rewarded.

Moreover, compute benchmarks that define the state of the art are a moving needle. It performs better than Coder v1 and LLM v1 on NLP and math benchmarks. DeepSeek, developed by a Chinese research lab backed by High Flyer Capital Management, managed to create a competitive large language model (LLM) in just two months using less powerful GPUs, specifically Nvidia's H800, at a cost of only $5.5 million. Shortly afterward, on November 29, 2023, it released the DeepSeek LLM model, which it called a "next-generation open-source LLM." Right after that, in February 2024, it released DeepSeekMath, a specialized model with 7B parameters. The cause of this identity confusion seems to come down to training data. Microscaling data formats for deep learning. FP8 formats for deep learning. Scaling FP8 training to trillion-token LLMs. Most LLMs write code to access public APIs very well, but struggle with accessing private APIs. LiveCodeBench: Holistic and contamination-free evaluation of large language models for code. Fact, fetch, and reason: A unified evaluation of retrieval-augmented generation.

C-Eval: A multi-level multi-discipline Chinese evaluation suite for foundation models. Massive activations in large language models. So then I found a model that gave quick responses in the right language. His second obstacle is "underinvestment in humans," and his remedy is to invest in "training and education." People must learn to use the new AI tools "the right way." This is a certain mindset's answer for everything. Yet, well, the strawmen are real (in the replies). True, I'm guilty of mixing up actual LLMs with transfer learning. Learning and Education: LLMs will be a great addition to education by offering personalized learning experiences. If we're talking about small apps and proofs of concept, Vite is great. It's like, okay, you're already ahead because you have more GPUs. Higher clock speeds also improve prompt processing, so aim for 3.6GHz or more. GPTQ: Accurate post-training quantization for generative pre-trained transformers. A straightforward strategy is to use block-wise quantization per 128x128 elements, the same way we quantize the model weights. Others demonstrated simple but clear examples of advanced Rust usage, like Mistral with its recursive approach or Stable Code with parallel processing. A lot can go wrong even for such a simple example. The open-source generative AI movement can be difficult to keep up with, even for those working in or covering the field, such as us journalists at VentureBeat.
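With block-wise quantization, each 128x128 tile gets its own scale, so a single outlier only costs precision inside its own tile. The sketch below is a pure-Python illustration with several assumptions. It uses symmetric int8 absmax scaling as a stand-in for a low-precision format such as FP8. The int8 target, the absmax rule, and the function names are all assumptions, not a description of any particular framework.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def quantize_blockwise(w: Matrix, block: int = 128, qmax: int = 127) -> Tuple[List[List[int]], Matrix]:
    """Symmetric per-tile absmax quantization: each block x block tile gets scale = max|w| / qmax."""
    rows, cols = len(w), len(w[0])
    n_br = (rows + block - 1) // block  # number of tile rows (ceiling division)
    n_bc = (cols + block - 1) // block  # number of tile columns
    scales = [[0.0] * n_bc for _ in range(n_br)]
    q = [[0] * cols for _ in range(rows)]
    for bi in range(n_br):
        for bj in range(n_bc):
            r0, r1 = bi * block, min((bi + 1) * block, rows)
            c0, c1 = bj * block, min((bj + 1) * block, cols)
            amax = max(abs(w[r][c]) for r in range(r0, r1) for c in range(c0, c1))
            scale = amax / qmax if amax > 0 else 1.0  # avoid dividing by zero on all-zero tiles
            scales[bi][bj] = scale
            for r in range(r0, r1):
                for c in range(c0, c1):
                    # Round to the nearest integer level and clamp to [-qmax, qmax].
                    q[r][c] = max(-qmax, min(qmax, round(w[r][c] / scale)))
    return q, scales

def dequantize_blockwise(q: List[List[int]], scales: Matrix, block: int = 128) -> Matrix:
    """Multiply each quantized value by the scale of the tile it belongs to."""
    return [[q[r][c] * scales[r // block][c // block] for c in range(len(q[0]))]
            for r in range(len(q))]

# Usage on a tiny matrix with 2x2 tiles, so the per-tile scales are easy to inspect.
w = [[0.1, -0.2, 5.0, 4.0],
     [0.3, 0.05, -3.0, 1.0],
     [0.01, 0.02, 0.03, 0.04],
     [-0.04, 0.0, 0.02, -0.01]]
q, s = quantize_blockwise(w, block=2)
w_hat = dequantize_blockwise(q, s, block=2)
```

With a single global scale, the 5.0 entry would force a coarse step onto the small values in the bottom rows. With per-tile scales, each element's reconstruction error stays within half of its own tile's step size.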
