To Click or Not to Click: DeepSeek and Blogging


U.S. congressional offices have reportedly been warned not to use DeepSeek technology. Early testers report that it delivers large outputs while keeping power demands surprisingly low, a not-so-small advantage in a world obsessed with green tech. AI, experts warn quite emphatically, might quite literally take control of the world from humanity if we do a bad job of designing billions of super-smart, super-powerful AI agents that act independently in the world. Take healthcare, for example. Increasingly, industries are demanding AI systems that cater to their unique challenges: systems that do more than "talk smart" and actually solve problems in real, measurable ways. Upon completing the RL training phase, we implement rejection sampling to curate high-quality SFT data for the final model, where the expert models are used as data generation sources. This stage used one reward model, trained on compiler feedback (for coding) and ground-truth labels (for math). The AI assistant is powered by the startup's "state-of-the-art" DeepSeek-V3 model, allowing users to ask questions, plan trips, generate text, and more. Slightly different from DeepSeek-V2, DeepSeek-V3 uses the sigmoid function to compute the affinity scores and applies a normalization among all selected affinity scores to produce the gating values.
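The gating rule mentioned above (sigmoid affinity scores, then normalization over only the selected experts) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DeepSeek's code: the function name `sigmoid_topk_gating`, the `expert_centroids` vectors, the plain dot-product affinity, and the toy dimensions are all hypothetical, and any load-balancing adjustments the real router may apply are left out.

```python
import math
from typing import Dict, List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_topk_gating(
    hidden: List[float], expert_centroids: List[List[float]], k: int
) -> Dict[int, float]:
    """Map each of the k selected expert indices to its gating value.

    Hypothetical sketch: affinity = sigmoid(dot(hidden, centroid)).
    """
    # 1. Sigmoid affinity between the token and every routed expert.
    scores = [
        sigmoid(sum(h * c for h, c in zip(hidden, centroid)))
        for centroid in expert_centroids
    ]
    # 2. Select the k experts with the highest affinity.
    top_k = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # 3. Normalize among the selected scores only, so the gates sum to 1.
    total = sum(scores[i] for i in top_k)
    return {i: scores[i] / total for i in top_k}


# Toy example: a 2-dimensional token and four routed experts.
gates = sigmoid_topk_gating(
    hidden=[1.0, -0.5],
    expert_centroids=[[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]],
    k=2,
)
print(gates)
```

In this toy example, experts 0 and 3 have the highest affinities, so only they receive nonzero gates. The renormalization step matters because sigmoid scores, unlike a softmax, do not sum to 1 across experts on their own.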

The assistant is powered by the DeepSeek-V3 model, and DeepSeek released details earlier this month on R1, the reasoning model that underpins its chatbot. The chatbot also has a tool drawer for visualizing the reasoning the bot follows to reach an answer (called "deep thinking") and for activating the search function. It excels at both English and Chinese language tasks, as well as code generation and mathematical reasoning. While older AI systems focus on solving isolated problems, DeepSeek excels where multiple inputs collide. Because it uses a Mixture-of-Experts (MoE) architecture, DeepSeek-V3 has 671 billion parameters in total but activates only 37 billion per token, allowing efficient processing and high-quality output across a wide range of tasks. DeepSeek-V3 and DeepSeek-V2.5 use an MoE architecture, whereas Qwen2.5 and Llama 3.1 use a dense architecture. It is free for commercial use and fully open source. The most remarkable aspect of this development is that DeepSeek has fully open-sourced the R1 model under the MIT license, making it freely available for both commercial and academic purposes. Open source: MIT-licensed weights, with 1.5B to 70B distilled variants for commercial use.
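The 671-billion versus 37-billion figures translate into a simple ratio. As a rough worked example, assuming the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass and ignoring attention costs:

$$
\frac{N_{\text{active}}}{N_{\text{total}}} = \frac{37 \times 10^{9}}{671 \times 10^{9}} \approx 0.055
$$

$$
\text{FLOPs per token} \approx 2 N_{\text{active}} = 7.4 \times 10^{10}
\quad \text{vs.} \quad
2 N_{\text{total}} \approx 1.34 \times 10^{12} \text{ for a dense model of the same size}
$$

So each token touches roughly 5.5% of the weights, although all 671 billion parameters still have to be held in memory.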


As more businesses adopt the platform, delivering consistent performance across diverse use cases, whether predicting stock trends or diagnosing health conditions, becomes a massive logistical balancing act. In finance, for example, that means analyzing decades of financial trends for forecasting and decision-making. Its true power lies in how naturally it plays in arenas like data forecasting, business intelligence, and even custom decision-making. DeepSeek can chew on vendor data, market sentiment, and even wildcard variables like weather patterns, all on the fly, spitting out insights that wouldn't look out of place in a corporate boardroom PowerPoint. Master weights and gradients are even stored in fp32. Finance and e-commerce follow the same thread: predictive models that are fine-tuned for industry variables rather than generic algorithms stretched too thin. The architecture was essentially the same as the Llama series. In addition, it does not have a built-in image generation function and still has some processing issues. First, they fine-tuned the DeepSeekMath-Base 7B model on a small dataset of formal math problems and their Lean 4 definitions to obtain the initial version of DeepSeek-Prover, their LLM for proving theorems.
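To see why keeping master weights in fp32 matters, the Python sketch below applies 100 small updates of `1e-4` to a weight of 1.0, once storing the weight in fp32 and once in a 16-bit format. It is an illustrative assumption, not DeepSeek's training code: it uses IEEE half precision (fp16) as the low-precision stand-in only because Python's standard `struct` module can round to it, and the update size, step count, and helper names are arbitrary.

```python
import struct
from typing import Callable


def to_fp16(x: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to IEEE 754 single precision and back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def accumulate(update: float, steps: int, cast: Callable[[float], float]) -> float:
    """Apply `steps` identical updates, storing the weight in `cast` precision."""
    w = cast(1.0)
    for _ in range(steps):
        # The sum is computed in double precision, then stored back
        # in the chosen format, as a low-precision weight buffer would be.
        w = cast(w + update)
    return w


low = accumulate(1e-4, 100, to_fp16)     # weight kept in fp16
master = accumulate(1e-4, 100, to_fp32)  # weight kept as an fp32 master copy
print(low, master)
```

Near 1.0, adjacent fp16 values are 2^-10 (about 0.00098) apart, so each 1e-4 update rounds away and the fp16 weight never moves, while the fp32 copy accumulates to roughly 1.01.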

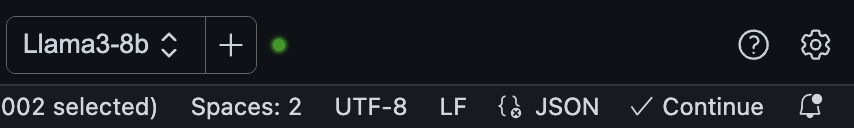

Click the Model tab. The rule-based reward model was manually programmed. On April 28, 2023, ChatGPT was restored in Italy, and OpenAI said it had "addressed or clarified" the issues raised by the Garante. However, the key difference is that Western companies such as OpenAI (ChatGPT) and Google (Gemini) have more legal avenues to explore when resisting data requests from authorities. Compute, however, the term for the physical hardware that powers algorithms, is much easier to govern. In short, DeepSeek AI isn't chasing the AI gold rush to be "the next big thing." It's carving out its own niche while making other tools look a little… Overall, DeepSeek is fast, efficient, and versatile, setting itself apart in the AI landscape. The AI landscape is constantly evolving, with new players entering the scene and reshaping the conversation. The days of general-purpose AI dominating every conversation are winding down. DeepSeek's touted advantages (contextual understanding, speed, efficiency) are impressive, but its rivals are only a breakthrough or two away from neutralizing these distinctions. Liang went on to establish two more companies focused on computer-directed investment, Hangzhou Huanfang Technology Co and Ningbo Huanfang Quantitative Investment Management Partnership, in 2015 and 2016, respectively. Additionally, we leverage the IBGDA (NVIDIA, 2022) technology to further minimize latency and enhance communication efficiency.
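A hand-written, rule-based reward of the kind mentioned above, combined with the rejection-sampling step described earlier for curating SFT data, can be illustrated with a small Python sketch covering only the math case. Everything here is an assumption for illustration: the `\boxed{...}` answer convention, the exact-string match against a ground-truth label, and the names `extract_final_answer`, `rule_based_reward`, and `rejection_sample` are hypothetical and are not taken from DeepSeek's pipeline.

```python
import re
from typing import List, Optional

# Hypothetical convention: the model writes its final answer as \boxed{...}.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_final_answer(response: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in the response, if any."""
    matches = BOXED.findall(response)
    return matches[-1].strip() if matches else None


def rule_based_reward(response: str, ground_truth: str) -> float:
    """1.0 if the extracted answer exactly matches the label, else 0.0."""
    answer = extract_final_answer(response)
    return 1.0 if answer is not None and answer == ground_truth.strip() else 0.0


def rejection_sample(candidates: List[str], ground_truth: str) -> List[str]:
    """Keep only candidate responses that earn full reward."""
    return [c for c in candidates if rule_based_reward(c, ground_truth) == 1.0]


candidates = [
    r"2 * 21 = 42, so the answer is \boxed{42}.",
    r"I think it is \boxed{41}.",
    "The answer is 42.",  # no boxed answer, so it gets no reward
]
kept = rejection_sample(candidates, "42")
print(kept)
```

Exact string matching is deliberately strict here; a practical checker would likely normalize equivalent answers (for example, `0.5` versus `1/2`), which this sketch does not attempt.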
