DeepSeek Adventures

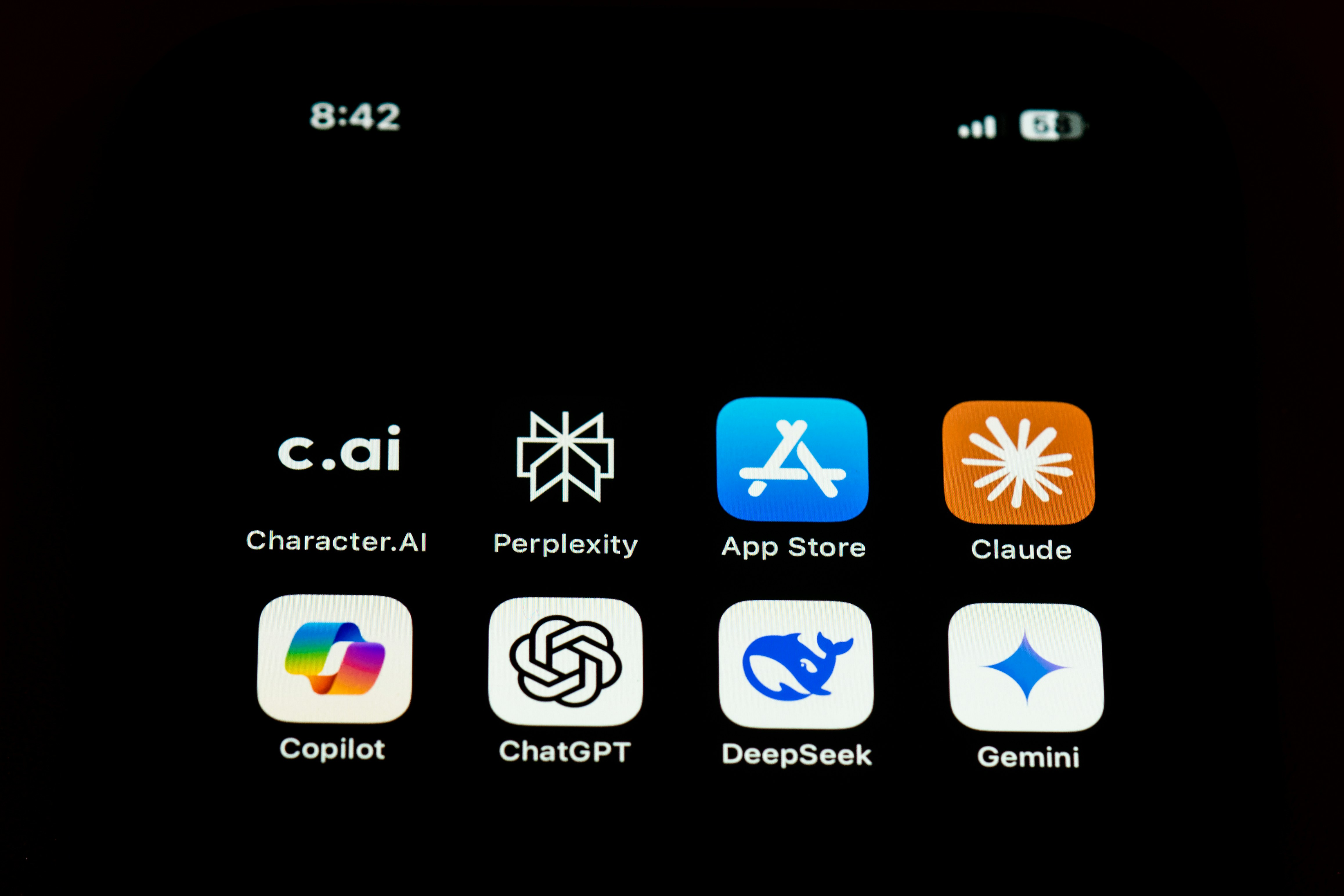

One model acts as the main model, while the others act as MTP (Multi-Token Prediction) modules. Although it adds layers of complexity, the MTP approach is important for improving the model's performance across different tasks. Its performance on English tasks was comparable to that of Claude 3.5 Sonnet in several benchmarks. It is not easy to find an app that gives accurate, AI-powered search results for research, news, and general queries. This feedback is used to update the agent's policy and guide the Monte-Carlo Tree Search process. Sites publishing misleading, AI-generated, or low-quality content risk demotion in search rankings.

Also, as you can see in the visualization above, DeepSeek V3 designates certain experts as "shared experts," and these experts are always active regardless of the task. As you can see from the figure above, the approach jointly compresses the key and value into a single low-rank representation. As you can see from the image above, this approach is used in DeepSeek V3 as a replacement for the original feed-forward network in the Transformer block.

In both text and image generation, we have seen large, step-function-like improvements in model capabilities across the board. Cost disruption: DeepSeek claims to have developed its R1 model for less than $6 million.
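The joint key-value compression mentioned above can be illustrated with a minimal sketch. The dimensions, the random weights, and the names `W_down`, `W_up_k`, and `W_up_v` are all assumptions made for illustration; this is not DeepSeek's actual implementation, and it leaves out positional encoding and the attention computation itself.

```python
import random

D_MODEL = 8   # hidden size (illustrative assumption)
D_LATENT = 2  # size of the shared low-rank representation (illustrative assumption)

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def random_matrix(rows, cols, rng):
    """Build a rows x cols matrix of small random weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
W_down = random_matrix(D_LATENT, D_MODEL, rng)  # one down-projection shared by key and value
W_up_k = random_matrix(D_MODEL, D_LATENT, rng)  # rebuilds the key from the latent
W_up_v = random_matrix(D_MODEL, D_LATENT, rng)  # rebuilds the value from the latent

def compress(hidden):
    """Project a token's hidden state into the shared low-rank latent."""
    return matvec(W_down, hidden)

def expand(latent):
    """Rebuild both key and value from the same latent vector."""
    return matvec(W_up_k, latent), matvec(W_up_v, latent)

hidden = [rng.uniform(-1.0, 1.0) for _ in range(D_MODEL)]
latent = compress(hidden)
key, value = expand(latent)

# In this sketch, storing the latent takes fewer numbers than storing key and value.
latent_size = len(latent)
full_size = len(key) + len(value)
print(latent_size, full_size)
```

Because the key and value are both rebuilt from one small vector, the sketch stores `D_LATENT` numbers per token instead of `2 * D_MODEL`.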

It is as if we are explorers and we have discovered not just new continents, but a hundred different planets, they said. "In the first stage, two separate experts are trained: one that learns to get up from the ground and another that learns to score against a fixed, random opponent."

Another interesting technique implemented in DeepSeek V3 is the Mixture of Experts (MoE) approach. During the training phase, each model gets different data from a specific domain, so that each becomes an expert in solving tasks from that domain. During the training phase, both the main model and the MTP modules take input from the same embedding layer. After predicting the tokens, both the main model and the MTP modules use the same output head.

Whether you're a developer looking to integrate DeepSeek into your projects or a business leader seeking to gain a competitive edge, this guide will give you the knowledge and best practices to succeed. Consequently, DeepSeek V3 demonstrated the best performance compared to others on the Arena-Hard and AlpacaEval 2.0 benchmarks.
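To make the shared embedding layer and shared output head more concrete, here is a toy sketch. Everything in it is hypothetical: the four-token vocabulary, the hand-picked weights, and `make_block`, which stands in for a real Transformer block by simply rescaling the hidden state. The way each MTP module combines the previous hidden state with an embedding is also a simplifying assumption.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def argmax(values):
    """Index of the largest value (first one wins on ties)."""
    return max(range(len(values)), key=values.__getitem__)

# Shared by the main model and every MTP module, as the text describes.
EMBEDDING = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.5, 0.5, 0.0],
]
OUTPUT_HEAD = [
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0],
    [0.3, 0.3, 0.3],
]

def make_block(scale):
    """Hypothetical stand-in for a Transformer block: it only rescales the state."""
    return lambda hidden: [scale * x for x in hidden]

main_block = make_block(1.0)
mtp_blocks = [make_block(0.5), make_block(0.25)]  # one block per extra step ahead

def predict_ahead(token_id):
    """Predict 1 + len(mtp_blocks) future token ids, one module after another."""
    hidden = main_block(EMBEDDING[token_id])  # shared embedding layer
    predictions = [argmax([dot(row, hidden) for row in OUTPUT_HEAD])]  # shared head
    for block in mtp_blocks:
        # Assumption: each MTP module combines the previous hidden state with
        # the shared embedding of the most recently predicted token.
        combined = [h + e for h, e in zip(hidden, EMBEDDING[predictions[-1]])]
        hidden = block(combined)
        predictions.append(argmax([dot(row, hidden) for row in OUTPUT_HEAD]))
    return predictions

predictions = predict_ahead(0)
print(predictions)
```

The point of the sketch is structural: `EMBEDDING` and `OUTPUT_HEAD` are defined once and reused by every module, while only the blocks differ.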

As you can imagine, by looking at possible future tokens several steps ahead in a single decoding step, the model is able to learn the best possible solution for any given task. Washington faces a daunting but crucial task. This approach makes inference faster and more efficient, since only a small number of expert models are activated during prediction, depending on the task. This strategy introduces a bias term for each expert model that is dynamically adjusted depending on the routing load of the corresponding expert. However, the implementation still needs to be done in sequence, i.e., the main model goes first by predicting the token one step ahead, and after that, the first MTP module predicts the token two steps ahead. Common LLMs predict one token in each decoding step, but DeepSeek V3 operates differently, especially in its training phase. Implementing an auxiliary loss helps to force the gating network to learn to distribute the training data to different models.
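The bias-term balancing described above can be sketched roughly as follows. The synthetic scores, the batch size, `STEP_SIZE`, and the update rule (a fixed step down for overloaded experts, up for underloaded ones) are assumptions chosen for illustration rather than DeepSeek's exact procedure. The sketch also assumes the bias only influences which expert is selected.

```python
import random

NUM_EXPERTS = 4
TOP_K = 1
STEP_SIZE = 0.05  # hypothetical speed at which the bias is adjusted

def route(scores, bias, top_k=TOP_K):
    """Return the indices of the top_k experts ranked by score plus bias."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:top_k]

def update_bias(bias, load, step_size=STEP_SIZE):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step_size)
        elif n < mean_load:
            new_bias.append(b + step_size)
        else:
            new_bias.append(b)
    return new_bias

def run_batch(bias, rng, tokens=64):
    """Route one batch of synthetic tokens and count the tokens per expert."""
    load = [0] * NUM_EXPERTS
    for _ in range(tokens):
        # Synthetic scores: expert 0 is strongly preferred before balancing.
        scores = [rng.uniform(0.0, 0.5) for _ in range(NUM_EXPERTS)]
        scores[0] += 1.0
        for expert in route(scores, bias):
            load[expert] += 1
    return load

rng = random.Random(0)
bias = [0.0] * NUM_EXPERTS
first_load = run_batch(bias, rng)
load = first_load
for _ in range(50):
    bias = update_bias(bias, load)
    load = run_batch(bias, rng)
last_load = load

print("first batch:", first_load)
print("last batch: ", last_load)
```

With zero bias, every token in the first batch goes to expert 0. After repeated adjustments the bias of expert 0 has been pushed down and the others pushed up, so later batches are spread across the experts.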
