A Hundred and One Ideas for DeepSeek

We thank (alphabetically) the DeepSeek team, Hugging Face team, SGLang team, TensorRT-LLM team, vLLM team, and WebLLM team for their helpful feedback and discussions. We are committed to our mission of bringing zero-overhead flexible structured generation to everyone and warmly welcome feedback and contributions from the community. Through these optimizations, we achieve both accuracy and efficiency without compromise, fulfilling our goal of flexible and efficient structured generation. In all cases, XGrammar enables high-performance generation in both settings without compromising flexibility and efficiency. For end-to-end evaluation, we benchmarked the LLM inference engine efficiency in serving scenarios with different batch sizes. We also benchmarked llama-cpp’s built-in grammar engine (b3998) and lm-format-enforcer (v0.10.9; lm-format-enforcer has no CFG support). However, this model cannot be considered entirely a community-driven project, as it receives significant support from DeepSeek itself. One of the biggest limitations on inference is the sheer amount of memory required: you need to load both the model and the entire context window into memory. Because the MoE part only needs to load the parameters of one expert, the memory access overhead is minimal, so using fewer SMs will not significantly affect the overall performance.
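To make the memory point above concrete, here is a rough back-of-the-envelope sketch. It assumes a standard transformer with an uncompressed key/value cache and 16-bit weights; the function names and every model dimension in the usage note are hypothetical and do not describe any particular DeepSeek model.

```python
def weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Memory needed to hold the model weights (2 bytes per parameter for fp16/bf16)."""
    return num_params * bytes_per_param


def kv_cache_bytes(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    context_len: int,
    batch_size: int = 1,
    bytes_per_elem: int = 2,
) -> int:
    """Memory for a plain (uncompressed) KV cache.

    The leading factor of 2 accounts for storing both keys and values
    for every layer, head, and token position.
    """
    return (
        2 * num_layers * num_kv_heads * head_dim
        * context_len * batch_size * bytes_per_elem
    )


def total_inference_bytes(num_params: int, **kv_args: int) -> int:
    """Weights plus KV cache; ignores activations and framework overhead."""
    return weight_bytes(num_params) + kv_cache_bytes(**kv_args)
```

For an illustrative 7-billion-parameter model with 32 layers, 32 KV heads of dimension 128, and a 4096-token context, this gives about 14 GB of weights plus 2 GiB of KV cache for a single sequence. The cache term grows linearly with both context length and batch size, which is why long contexts are expensive to serve.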

The above optimizations help us reduce the overall overhead of grammar execution. XGrammar solves the above challenges and provides full and efficient support for context-free grammar in LLM structured generation through a series of optimizations. Pushdown automata structure optimizations. Persistent execution stack. To speed up the maintenance of multiple parallel stacks during splitting and merging due to multiple possible expansion paths, we design a tree-based data structure that efficiently manages multiple stacks together. DeepSeek CEO Liang Wenfeng, also the founder of High-Flyer - a Chinese quantitative fund and DeepSeek’s primary backer - recently met with Chinese Premier Li Qiang, where he highlighted the challenges Chinese companies face due to U.S. Our main insight is that although we cannot precompute complete masks for infinitely many states of the pushdown automaton, a significant portion (usually more than 99%) of the tokens in the mask can be precomputed in advance. Moreover, we need to maintain multiple stacks during the execution of the PDA, whose number can be up to dozens. DeepSeek-R1 was hugely disruptive when it first debuted, for a number of reasons - one of which was the implication that a leading-edge open-source reasoning model could be built and deployed with less infrastructure than a proprietary model.
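The tree-based stack idea described above can be sketched as a persistent (immutable) stack in which every stack is just a pointer to a node in a shared tree. This is a minimal illustration of the general technique, not XGrammar’s actual implementation; the class and method names are hypothetical.

```python
from typing import List, Optional, Tuple


class Node:
    """One stack frame; `parent` points toward the bottom of the stack."""
    __slots__ = ("value", "parent")

    def __init__(self, value: str, parent: Optional["Node"]) -> None:
        self.value = value
        self.parent = parent


class PersistentStack:
    """A stack represented as a pointer into a shared tree of nodes.

    push and pop return new stack handles in O(1) and never mutate
    existing nodes, so stacks that split from a common state share
    their common prefix instead of copying it.
    """

    def __init__(self, top: Optional[Node] = None) -> None:
        self._top = top

    def push(self, value: str) -> "PersistentStack":
        return PersistentStack(Node(value, self._top))

    def pop(self) -> Tuple[str, "PersistentStack"]:
        if self._top is None:
            raise IndexError("pop from empty stack")
        return self._top.value, PersistentStack(self._top.parent)

    def to_list(self) -> List[str]:
        """Return the stack contents from bottom to top."""
        items: List[str] = []
        node = self._top
        while node is not None:
            items.append(node.value)
            node = node.parent
        return items[::-1]
```

When the automaton reaches a point with several possible expansions, each branch pushes onto the same base handle, for example `base.push("string")` and `base.push("number")`. Both branches then share the frames of `base`, so splitting costs one node per branch rather than a full stack copy.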

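The observation that most of the token mask can be precomputed can be illustrated with a toy sketch. It assumes each automaton state has a precomputed set of tokens that are always accepted, plus a small set of context-dependent tokens that must be checked against the stack at runtime. All names here (`build_mask`, `apply_mask`, the state label, the `runtime_check` callback) are hypothetical and are not part of any library’s API.

```python
import math
from typing import Callable, Dict, List, Set


def build_mask(
    state: str,
    precomputed_accept: Dict[str, Set[int]],
    context_dependent: Dict[str, Set[int]],
    runtime_check: Callable[[int], bool],
    vocab_size: int,
) -> List[bool]:
    """Combine a cached per-state mask with a few runtime checks.

    Tokens in precomputed_accept[state] are valid regardless of the stack.
    Tokens in context_dependent[state] need the full stack, so they are
    validated by runtime_check. All other tokens are rejected.
    """
    mask = [False] * vocab_size
    for token_id in precomputed_accept[state]:
        mask[token_id] = True
    for token_id in context_dependent[state]:
        mask[token_id] = runtime_check(token_id)
    return mask


def apply_mask(logits: List[float], mask: List[bool]) -> List[float]:
    """Set the logits of disallowed tokens to -inf so they cannot be sampled."""
    return [x if ok else -math.inf for x, ok in zip(logits, mask)]
```

In this sketch the expensive per-token work is confined to the context-dependent set, which according to the text is typically a small fraction of the vocabulary.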

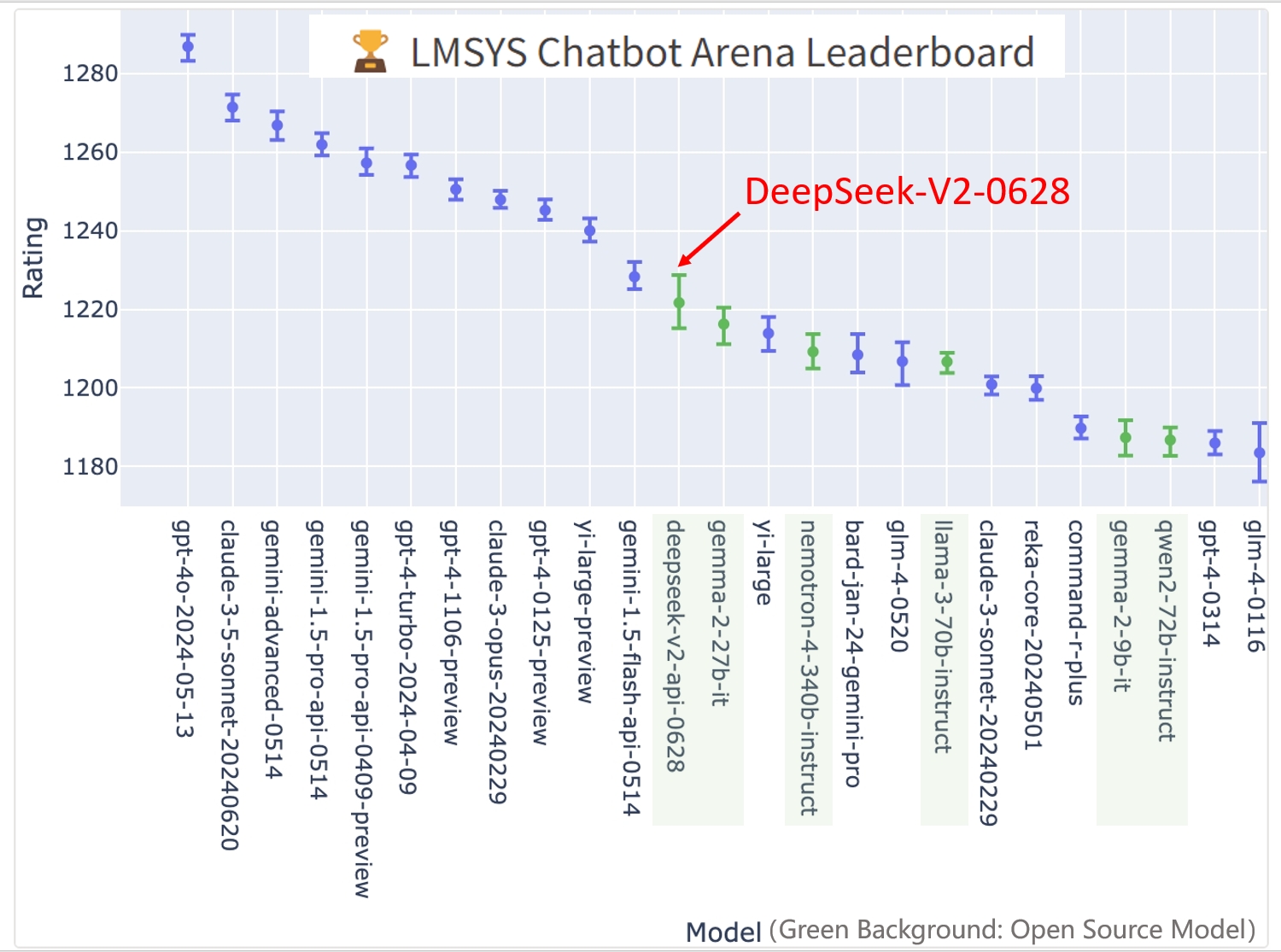

We first evaluate the speed of masking logits. DeepSeek-Coder-V2 is the first open-source AI model to surpass GPT4-Turbo in coding and math, which made it one of the most acclaimed new models. DeepSeek, a one-year-old startup, revealed a stunning capability last week: it presented a ChatGPT-like AI model called R1, which has all the familiar abilities, operating at a fraction of the cost of OpenAI’s, Google’s or Meta’s popular AI models. Nvidia (NVDA), the leading supplier of AI chips, fell nearly 17% and lost $588.8 billion in market value - by far the most market value a stock has ever lost in a single day, more than doubling the previous record of $240 billion set by Meta nearly three years ago. The tech-heavy Nasdaq plunged by 3.1% and the broader S&P 500 fell 1.5%. The Dow, boosted by health care and consumer companies that could be hurt by AI, was up 289 points, or about 0.7%.


Multi-Layered Learning: Instead of using traditional one-shot AI, DeepSeek R1 employs multi-layer learning to deal with complex interconnected problems. It uses deep learning to identify patterns and trends. US stocks dropped sharply Monday - and chipmaker Nvidia lost nearly $600 billion in market value - after a surprise advancement from a Chinese artificial intelligence company, DeepSeek, threatened the aura of invincibility surrounding America’s technology industry. For perspective, Nvidia lost more in market value Monday than all but 13 companies are worth - period. Nvidia began the day as the most valuable publicly traded stock on the market - over $3.4 trillion - after its shares more than doubled in each of the past two years. The DeepSeek startup is less than two years old - it was founded in 2023 by 40-year-old Chinese entrepreneur Liang Wenfeng - and released its open-source models for download in the United States in early January, where it has since surged to the top of the iPhone download charts, surpassing the app for OpenAI’s ChatGPT. The DeepSeek app is available for Android devices and can be downloaded for free from the Google Play Store.
